On the internet, one goal matters to businesses, organizations, and website owners alike: visibility. Whether potential visitors and customers can discover, explore, and access your website can make or break your online presence. In Search Engine Optimization (SEO), one of the most serious threats to that visibility is de-indexing.

De-indexing is a term that sends shivers down the spines of webmasters, content creators, and SEO enthusiasts. It’s the process by which search engines, with Google leading the charge, decide to remove your web pages or content from their indexes. In simpler terms, when a page gets de-indexed, it’s as if it’s vanished from the vast library of the internet. You can think of it as your book suddenly disappearing from the shelves of a massive, global library – it’s no longer available for anyone to discover.

But why should you care about de-indexing? What’s the significance of this term in the grand scheme of SEO and your website’s success? Let’s dive deeper into these questions as we explore the world of de-indexing and the critical role it plays in ensuring that your online presence doesn’t slip into obscurity.

What Does De-Index Mean?

In simple terms, de-indexing is the removal of a web page, or an entire website, from a search engine’s index. Google is the most prominent search engine that does this. A de-indexed page doesn’t just rank lower; it can’t appear in search results at all. It is like a book dropped from a library’s catalog: the book may still exist, but no one searching the catalog will find it.

Ways to Get De-Indexed by Google

De-indexing by Google can be a website owner’s worst nightmare, but understanding the common causes of de-indexing is the first step in preventing it from happening to your site.

Google Removes Your Domain from Search Results

One of the most direct ways to get de-indexed by Google is to have your entire domain or website removed from search results. This is a drastic measure that Google takes when it believes your website is in serious violation of its quality guidelines or policies. Such a removal can have a devastating impact on your online presence.

Violating Quality Guidelines: Google has stringent quality guidelines in place to ensure that the content displayed in its search results is relevant, accurate, and user-friendly. Violating these guidelines, such as engaging in deceptive SEO practices or hosting low-quality content, can result in the removal of your entire domain from search results.

Manual Actions: Google’s team of human reviewers may take manual actions against your website if they identify serious violations. These actions can lead to your domain being temporarily or permanently removed from search results. Common manual actions include penalties for spammy content, unnatural links, or other forms of misconduct.

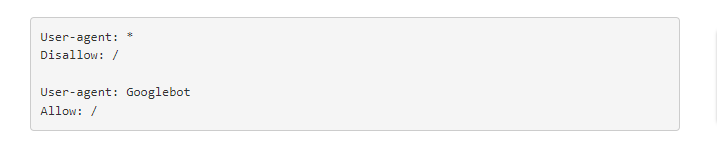

Crawl Blocking Through Robots.txt File

Blocking search engine bots through the robots.txt file is an easy way to vanish from Google, and it often happens by accident. If you disallow all or critical parts of your website, Googlebot can’t crawl that content, so Google can’t read or rank it. Strictly speaking, robots.txt controls crawling, not indexing. A blocked URL can still show up in results, usually without a description, if other pages link to it. Either way, the result is a severe loss of visibility.
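Before publishing changes to robots.txt, it helps to test which URLs the rules actually block. The sketch below uses Python’s standard-library `urllib.robotparser`; the robots.txt content and URLs are hypothetical. This parser does not reproduce every rule Googlebot follows. For example, it does not support Google’s `*` and `$` path wildcards or its most-specific-rule precedence. Treat it as a quick sanity check, not a replica of Google’s behavior.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt: blocks the /blog/ section for every crawler.
ROBOTS_TXT = """\
User-agent: *
Disallow: /blog/
"""


def crawl_allowed(robots_txt: str, user_agent: str, url: str) -> bool:
    """Return True if the robots.txt rules let user_agent fetch url."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())  # parse rules from a string, no network call
    return parser.can_fetch(user_agent, url)


if __name__ == "__main__":
    for url in ["https://example.com/", "https://example.com/blog/post-1"]:
        print(url, "allowed" if crawl_allowed(ROBOTS_TXT, "Googlebot", url) else "BLOCKED")
```

Running it reports the homepage as allowed and the blog post as blocked. A single `Disallow: /` line under `User-agent: *` would block the entire site.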

Spammy Pages

Hosting spammy or low-quality pages on your website is a surefire way to invite de-indexing. Google’s algorithms are designed to penalize websites that indulge in spammy practices, such as creating pages filled with irrelevant or nonsensical content. These pages not only fail to add value but can also result in penalties and de-indexing.

Keyword Stuffing

The practice of unnaturally overloading content with keywords in an attempt to manipulate search engine rankings can lead to de-indexing. Google recognizes this as a violation of its quality guidelines and takes a dim view of content that serves the sole purpose of keyword manipulation. Keyword-stuffed content can be de-indexed, causing a loss of visibility.
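There is no official keyword-density limit, and Google has not published how it detects stuffing. Still, a rough self-audit can flag copy worth rereading. The sketch below computes density as the occurrences of a single-word keyword divided by the total word count, times 100. The sample text is hypothetical, and using the percentage as a review cue is an assumption, not a Google rule.

```python
import re
from collections import Counter


def keyword_density(text: str, keyword: str) -> float:
    """Percentage of words in text that equal keyword (single word, case-insensitive)."""
    words = re.findall(r"[a-z0-9']+", text.lower())  # crude tokenizer: letters, digits, apostrophes
    if not words:
        return 0.0
    return 100.0 * Counter(words)[keyword.lower()] / len(words)


sample = "Cheap shoes. Buy cheap shoes here, cheap shoes online, cheap cheap shoes!"
print(f"{keyword_density(sample, 'cheap'):.1f}%")  # 5 of 12 words, prints 41.7%
```

Natural copy rarely repeats one word this often. A result like this is a cue to rewrite the text for readers rather than for rankings.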

Duplicate Content

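Having substantial portions of duplicate content across your website can keep pages out of Google’s index. Google aims to show diverse, unique results. When several URLs carry the same content, it usually picks one canonical version to index and leaves the others out. Ordinary duplication, such as the same page being reachable at several URLs, is not a penalty in itself. However, content deliberately duplicated to manipulate rankings can lead to manual actions and de-indexing.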
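To see whether your own site serves the same content at several URLs, you can hash each page’s text and group identical hashes. The sketch below assumes you have already extracted each URL’s main text into a dictionary; the `pages` data is hypothetical. It only catches exact duplicates after whitespace and case normalization. Near-duplicates need fuzzier techniques such as shingling.

```python
import hashlib
import re
from collections import defaultdict


def normalize(text: str) -> str:
    """Lowercase and collapse runs of whitespace so trivial differences don't matter."""
    return re.sub(r"\s+", " ", text).strip().lower()


def find_duplicates(pages: dict[str, str]) -> list[list[str]]:
    """Return groups of URLs whose normalized text is identical."""
    groups: dict[str, list[str]] = defaultdict(list)
    for url, text in pages.items():
        digest = hashlib.sha256(normalize(text).encode("utf-8")).hexdigest()
        groups[digest].append(url)
    return [sorted(urls) for urls in groups.values() if len(urls) > 1]


# Hypothetical crawl export: the tracking-parameter URL repeats the shoes page.
pages = {
    "https://example.com/shoes": "Our shoe   collection\nBrowse all shoes.",
    "https://example.com/shoes?ref=ad": "Our shoe collection Browse all shoes.",
    "https://example.com/boots": "Our boot collection. Browse all boots.",
}
print(find_duplicates(pages))
```

For each group, pick the version you want indexed. Point the others to it with a `rel="canonical"` link or a 301 redirect.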

Auto-Generated Content

Content that is automatically generated, often without human intervention or quality control, can lead to de-indexing. Google places a premium on content that provides value and relevance to users. Auto-generated content often fails to meet these criteria, making it a prime candidate for de-indexing.

Cloaking

Cloaking is the practice of showing different content to users and to search engine bots, usually to deceive search engines. When Google identifies cloaking, it typically acts quickly, including by de-indexing the offending pages. This practice undermines the integrity of search results and can result in severe penalties.

Sneaky Redirects

Employing sneaky redirects to different or unrelated websites, often without user consent, can lead to de-indexing. Google aims to provide users with accurate and relevant search results, and using redirects to deceive users or manipulate search rankings is a direct violation of its guidelines.

Phishing and Malware Setup

If your website is involved in phishing activities or hosts malware, it poses a significant threat to user security. Google takes such threats very seriously and is likely to de-index websites that engage in these harmful practices to protect users from potential harm.

User-Generated Spam

Allowing users to generate and post spammy or irrelevant content on your website can lead to de-indexing. It’s essential to maintain quality control and monitor user-generated content to prevent spam and ensure that your site remains a reputable source of information.

Link Schemes

Engaging in manipulative link schemes, such as buying or selling links to influence search rankings, is a violation of Google’s guidelines. Such practices can result in penalties, including de-indexing, as Google aims to maintain the integrity of its search results.

Low-Quality Content

Hosting a large amount of low-quality, thin, or irrelevant content can lead to de-indexing. Google’s algorithms aim to promote high-quality content and penalize websites that fail to provide value to users.

Hidden Text or Links

Hiding text or links by making them the same color as the background or using techniques to make them invisible to users but still detectable by search engines is against Google’s guidelines. This practice can lead to de-indexing if detected.

Doorway Pages

Doorway pages are created specifically to rank for particular keywords and then redirect users to other pages. Google considers this practice manipulative and often takes action, including de-indexing, to prevent users from landing on irrelevant pages.

Scraped Content

Publishing scraped or copied content without proper attribution or value-added modifications can lead to de-indexing. Google aims to reward original and unique content while penalizing duplicated or unoriginal material.

Low-Value Affiliate Programs

Websites that predominantly serve as conduits for low-value affiliate programs without adding substantial value to users can face de-indexing. Google expects websites to offer original and valuable content.

Poor Guest Posts

Hosting poorly written, irrelevant, or spammy guest posts can result in de-indexing. Google encourages websites to maintain high-quality standards for guest posts and penalizes those who fail to do so.

Spammy Structured Data Markup

Using structured data markup in a spammy or deceptive manner can lead to de-indexing. Structured data is meant to provide search engines with clear information about the content on a page. When it’s manipulated for the purpose of tricking search engines or misleading users, it violates Google’s guidelines and can result in de-indexing.

Automated Queries

Sending excessive automated queries to Google Search, for example by scraping rankings with bots, violates Google’s terms of service and its spam policies. It strains Google’s resources and can get the offending IP addresses temporarily or permanently blocked. As a spam policy violation, it also puts the site behind it at risk of penalties.

Excluding Webpages from Your Sitemap

Sitemaps help search engines discover and index your content. Leaving a page out of your sitemap does not by itself remove it from Google’s index, because Google also finds pages by following links. Deliberately excluding crucial webpages can, however, slow or prevent their discovery, especially on new sites or for pages with few internal links. Keep your sitemap an accurate reflection of the pages you want indexed.
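Generating the sitemap from the same list of URLs you publish keeps it from drifting out of date. The sketch below uses Python’s `xml.etree.ElementTree` to write the minimal form of the sitemaps.org protocol: a `urlset` of `url` entries, each with a required `loc`. The URLs are hypothetical, and optional tags such as `lastmod` are left out.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(urls: list[str]) -> str:
    """Return a minimal sitemap.xml document listing every URL you want indexed."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for url in urls:
        entry = ET.SubElement(urlset, "url")
        ET.SubElement(entry, "loc").text = url  # ElementTree escapes &, <, > in text
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body


print(build_sitemap(["https://example.com/", "https://example.com/about"]))
```

You can then submit the file in Google Search Console’s Sitemaps report, or reference it from robots.txt with a `Sitemap:` line.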

Hacked Content

If your website is hacked and serves malicious or irrelevant content to users, Google may temporarily de-index your website to protect users from potential harm. It’s crucial to promptly address security issues to avoid de-indexing.

Avoiding Search Engine Penalties

In the dynamic landscape of the internet, keeping your website indexed and free from search engine penalties is a top priority. Penalties can have a detrimental impact on your website’s visibility, potentially leading to de-indexing. So, how can you steer clear of these pitfalls and maintain your website’s favorable standing in the eyes of Google and other search engines?

Adherence to Quality Guidelines

The cornerstone of avoiding search engine penalties is adherence to quality guidelines set by search engines, with Google being the primary player. These guidelines are designed to ensure that websites in search results offer a valuable and user-friendly experience. Here’s how you can stay in compliance:

Provide Valuable Content: Craft high-quality, informative, and original content that caters to the needs of your target audience. Ensure that your content is accurate, up-to-date, and free from plagiarism.

Ethical SEO Practices: Avoid deceptive SEO practices such as keyword stuffing, cloaking, and link schemes. Instead, focus on ethical SEO techniques that enhance user experience and accessibility.

Responsive Web Design: Ensure that your website is mobile-friendly and responsive. A well-structured and user-friendly design is crucial for providing a positive user experience.

Google Algorithms

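Google’s algorithms are continually evolving, and keeping up with these changes is vital to avoiding penalties. Some updates target specific problems: Panda demotes low-quality content, and Penguin targets manipulative link building. Others, such as BERT, help Google understand the language of queries and pages. Together they aim to reward websites that offer value and demote those that violate guidelines.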

Content Updates: Regularly update your website’s content to keep it fresh and relevant. Google favors up-to-date information for many queries, especially time-sensitive ones, so refreshing content can help maintain your indexing and ranking.

Keyword Research: Stay up-to-date with the latest keyword trends and adjust your content to align with search intent. Keyword research tools and analytics can assist in this process.

Webmaster Tools

Utilizing Webmaster tools, such as Google Search Console, is a proactive step to ensure the health of your website. These tools offer insights into how Google views your site and can help you identify and address potential issues.

Regular Audits: Conduct regular website audits using Webmaster tools to identify technical issues, crawl errors, and security concerns. Addressing these issues promptly can prevent de-indexing.

Request Reviews: If Google applies a manual action to your website, first fix the issues that caused it. Then submit a reconsideration request from the Manual Actions report in Google Search Console.

Managing De-Indexing Issues

When the specter of de-indexing looms, it’s crucial to have a clear strategy in place for effectively managing these concerns. In this section, we’ll explore strategies that will help you navigate de-indexing issues and ensure your website remains visible and accessible.

Regular Content Audits

A proactive approach to managing de-indexing is conducting regular content audits. By evaluating the quality and relevance of your website’s content, you can identify potential issues that might lead to de-indexing.

Review Your Own Content: Regularly reviewing your content can often prevent the nightmare of having your entire website de-indexed. If you spot low-quality or irrelevant content, consider removing, improving, or consolidating it.

Learn from Other Sites: The experiences of webmasters whose websites have been de-indexed are invaluable. Study their cases and understand why they were de-indexed to avoid similar pitfalls.

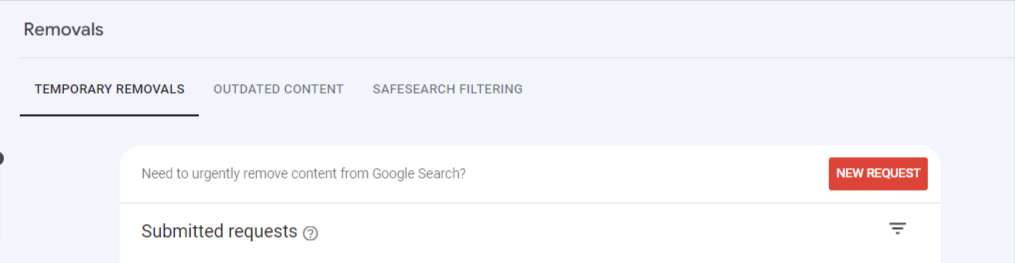

Content Removal

When it comes to managing de-indexing concerns, sometimes you have to make difficult decisions about specific content. If you identify content that is outdated, low-quality, or in violation of guidelines, removing it may be necessary.

Content Removal: Removing content that no longer serves its purpose is a strategic move. It not only helps prevent de-indexing but also ensures that the information you provide is always up-to-date and relevant.

Redirects: If you remove content, consider implementing proper redirects to guide users to alternative, relevant pages on your site. This ensures a seamless user experience and minimizes potential negative impacts.
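The decision for each removed URL can be written down as a simple mapping. The sketch below assumes a hypothetical, hand-maintained `REDIRECTS` table. Removed pages with a relevant replacement get a permanent 301 redirect. Pages with no equivalent return 410 Gone so search engines can drop them. Redirecting everything to the homepage is usually a poor substitute, since Google may treat irrelevant redirects as soft 404s.

```python
from http import HTTPStatus
from typing import Optional, Tuple

# Hypothetical map of removed paths to their closest replacement.
# None means the content is gone for good and nothing relevant replaces it.
REDIRECTS: dict[str, Optional[str]] = {
    "/old-pricing": "/pricing",
    "/blog/2019-roundup": "/blog/",
    "/discontinued-product": None,
}


def resolve(path: str) -> Tuple[int, Optional[str]]:
    """Return (status code, Location header value or None) for a requested path."""
    if path not in REDIRECTS:
        return HTTPStatus.OK, None  # page still exists: serve it normally
    target = REDIRECTS[path]
    if target is None:
        return HTTPStatus.GONE, None  # 410: removed with no replacement
    return HTTPStatus.MOVED_PERMANENTLY, target  # 301: send users and crawlers on


for path in ["/old-pricing", "/discontinued-product", "/contact"]:
    status, location = resolve(path)
    print(path, int(status), location)
```

On a real site, this logic usually lives in the web server configuration or the CMS’s redirect settings rather than in application code.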

Optimizing SEO for Website Visibility

Now that we’ve discussed how to manage de-indexing concerns, let’s explore how SEO optimization plays a pivotal role in maintaining and enhancing website visibility.

SEO Optimization

SEO optimization is the cornerstone of your website’s online presence. It encompasses a range of strategies and practices aimed at improving your website’s ranking in search results.

Keyword Research: Start by conducting thorough keyword research to identify the terms and phrases your target audience is searching for. By aligning your content with these keywords, you increase the likelihood of your website appearing in relevant search results.

Content Enhancement: Continuously enhance your content to provide value and maintain relevance. Well-structured, informative, and engaging content not only attracts visitors but also keeps them coming back.

User Experience: A crucial aspect of SEO optimization is user experience. Ensure that your website is user-friendly, mobile-responsive, and easy to navigate. These factors contribute to a positive user experience, which, in turn, affects your website’s visibility.

Website Visibility: The ultimate goal of SEO optimization is to improve website visibility. When your website ranks higher in search results, it becomes more visible to potential visitors and customers, ultimately leading to increased organic traffic.

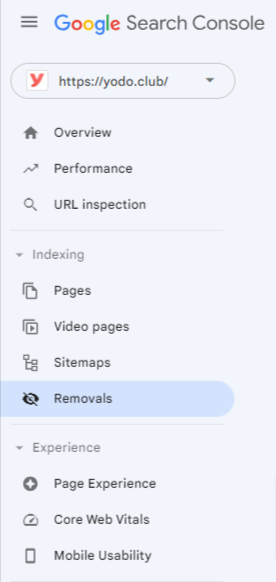

Utilizing Google Search Console

In the complex world of website management and SEO, one tool stands out as an invaluable ally – Google Search Console. Understanding its importance is crucial when it comes to managing indexing and ensuring that your website maintains its visibility. Let’s explore the significance of Google Search Console and how it can assist in addressing indexing issues.

Google Search Console: A Vital Ally

Google Search Console is a suite of tools provided by Google that allows webmasters and website owners to monitor, analyze, and maintain their website’s presence in Google’s search results. It’s an essential resource that provides valuable insights into how Google views your site, including what pages are indexed and how they perform.

Indexing Issues: One of the primary concerns that Google Search Console can help you address is indexing issues. If you notice that some of your pages are not indexed or that there are pages indexed that shouldn’t be, this tool provides a window into understanding why.

Key Functions of Google Search Console:

Let’s explore some key functions of Google Search Console that make it a vital resource for website owners:

Crawl Data: Google Search Console offers information about how Google’s bots crawl your website. This includes details about crawl errors, crawl statistics, and what Googlebot sees on your site. Understanding these aspects is crucial to prevent indexing issues.

Index Coverage: This report, now called the Page indexing report, shows which of your website’s pages are indexed and why others are not. It helps you identify issues that might lead to de-indexing and take corrective action.

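Mobile Usability: With an increasing number of users accessing the web via mobile devices, mobile-friendliness contributes to positive user experiences and search ranking. Search Console’s Mobile Usability report, which flagged these problems, was retired at the end of 2023. Tools such as Lighthouse in Chrome DevTools are now the usual way to check mobile-friendliness.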

Security Issues: Security is a significant concern for website owners. Google Search Console notifies you of potential security issues that might lead to de-indexing, such as malware or security vulnerabilities.

Request Indexing

A standout feature of Google Search Console is the ability to request indexing for specific pages through the URL Inspection tool. If you’ve updated your content or fixed indexing issues, this feature lets you ask Google to recrawl those pages. The request adds the URL to Google’s crawl queue, but it doesn’t guarantee that the page will be indexed, or indexed quickly.

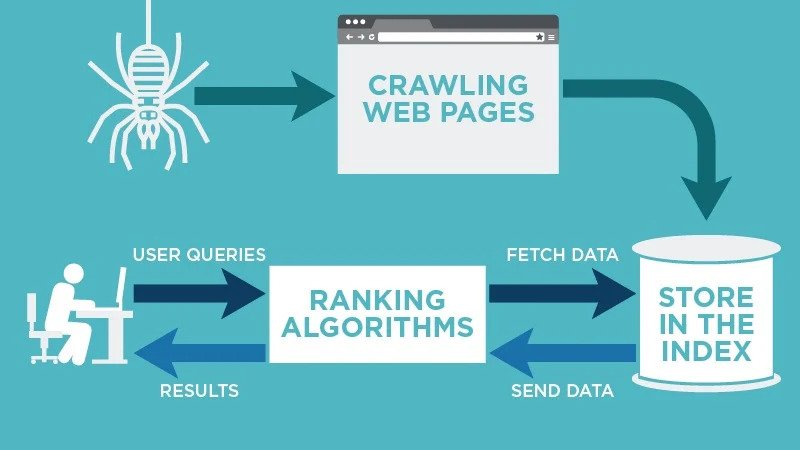

The Crawl Process in SEO

To truly understand how search engines like Google index your website, you need to be familiar with a fundamental process: the crawl process in SEO. It’s the unseen yet crucial mechanism that allows search engines to discover, analyze, and index web pages.

Crawl in SEO: What Is It?

The term crawl in SEO refers to the process where search engine bots, often called spiders or crawlers, systematically navigate through the vast landscape of the internet. These bots act as digital librarians, visiting web pages, scanning their content, and collecting data. They play a pivotal role in the indexing process that eventually determines how a webpage appears in search results.

Let’s break down the key components of the crawl process and its significance in SEO:

Discovering New Pages: Search engine bots start by discovering new web pages. They mainly do this by following links from pages they already know, which leads them into new corners of the internet. Sitemaps submitted by site owners are another source of URLs to visit.

Analyzing Content: Once a bot arrives at a web page, it thoroughly analyzes the content. It examines text, images, videos, and any other elements that make up the page. This analysis helps search engines understand the topic and relevance of the content.

Collecting Data: As the bot analyzes a page, it collects data about various elements, such as meta tags, headings, keywords, and more. This data is later used to determine how the page should be indexed and ranked.
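The three steps above can be sketched as a tiny breadth-first crawler. To keep it self-contained, it reads pages from a hypothetical in-memory `SITE` dictionary instead of the network, and it collects only links and `<h1>` headings. Real crawlers such as Googlebot also respect robots.txt, render JavaScript, and schedule recrawls; none of that is modeled here.

```python
from collections import deque
from html.parser import HTMLParser
from urllib.parse import urljoin

# Hypothetical in-memory "website" so the sketch runs without network access.
SITE = {
    "https://example.com/": '<h1>Home</h1><a href="/about">About</a><a href="/blog/">Blog</a>',
    "https://example.com/about": '<h1>About us</h1><a href="/">Home</a>',
    "https://example.com/blog/": '<h1>Blog</h1><a href="post-1">First post</a>',
    "https://example.com/blog/post-1": "<h1>First post</h1><p>Hello.</p>",
    "https://example.com/orphan": "<h1>Nobody links here</h1>",
}


class PageParser(HTMLParser):
    """Collects link targets and <h1> text from one HTML page."""

    def __init__(self) -> None:
        super().__init__()
        self.links: list[str] = []
        self.headings: list[str] = []
        self._in_h1 = False

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            href = dict(attrs).get("href")
            if href:
                self.links.append(href)
        elif tag == "h1":
            self._in_h1 = True

    def handle_endtag(self, tag):
        if tag == "h1":
            self._in_h1 = False

    def handle_data(self, data):
        if self._in_h1:
            self.headings.append(data.strip())


def crawl(start_url: str) -> dict[str, list[str]]:
    """Discover pages by following links (step 1), parse them (step 2), record data (step 3)."""
    seen = {start_url}
    queue = deque([start_url])
    collected: dict[str, list[str]] = {}
    while queue:
        url = queue.popleft()
        html = SITE.get(url)
        if html is None:  # broken link: nothing to fetch
            continue
        parser = PageParser()
        parser.feed(html)
        collected[url] = parser.headings
        for href in parser.links:
            absolute = urljoin(url, href)  # resolve relative links against the current page
            if absolute not in seen:
                seen.add(absolute)
                queue.append(absolute)
    return collected


for page, headings in crawl("https://example.com/").items():
    print(page, headings)
```

The orphan page, which nothing links to, is never discovered. This is why internal links and sitemaps matter for indexing.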

The Importance of the Crawl Process

Understanding the crawl process is vital because it directly impacts your website’s visibility. If a search engine bot can’t crawl your pages effectively, your content may remain hidden from search results.

- Indexing: After crawling, search engine bots make decisions about whether a page should be indexed or not. Pages that meet the quality criteria and provide value are typically indexed, making them available for searchers to discover.

- Ranking: The data collected during the crawl process also influences how a page is ranked. Search engines use this data to determine the relevance and authority of a page in relation to specific search queries.

- Frequency of Crawling: The frequency with which a bot crawls your website can vary. High-quality and frequently updated sites tend to be crawled more often, ensuring that the latest content is reflected in search results.

Frequently Asked Questions

1. How long does Google take to Deindex?

The time it takes for Google to de-index a webpage can vary. In some cases, it can happen relatively quickly, particularly if Google identifies serious violations of its guidelines. However, the exact duration can depend on several factors, including the severity of the issue, how frequently Google crawls your site, and whether manual actions are involved. It’s essential to address de-indexing issues promptly and work to resolve the root causes to expedite the process of re-indexing.

2. How do I remove SEO from Google?

You cannot remove SEO (Search Engine Optimization) itself from Google, as SEO is a set of practices aimed at optimizing your website’s visibility in search results. However, if you wish to de-optimize or de-emphasize your website’s presence in Google’s search results, you can take steps like removing or no-indexing pages, disavowing backlinks, and minimizing keyword optimization. Keep in mind that a more effective approach may be to optimize your SEO strategies for the outcomes you desire.

3. Why is Google not indexing?

There can be several reasons why Google is not indexing a particular webpage or website:

- Technical Issues: Technical problems on your website, such as robots.txt blocking, meta tags instructing noindex, or server issues, can prevent indexing.

- Quality Guidelines Violations: If your content violates Google’s quality guidelines, it may not be indexed.

- Low Quality or Value: If your content offers little value or relevance, Google may not index it.

- Crawl Errors: Crawl errors, like broken links or inaccessible content, can hinder indexing.
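A stray noindex directive is easy to check for yourself. The sketch below looks for a `noindex` directive, or the equivalent `none`, in a page’s `<meta name="robots">` or `<meta name="googlebot">` tag and in its `X-Robots-Tag` HTTP header. The HTML and headers are passed in directly, so fetching the page is left to you. User-agent-specific header forms such as `googlebot: noindex` are not handled. If robots.txt blocks the page, Google never sees these directives at all.

```python
from html.parser import HTMLParser

NOINDEX = {"noindex", "none"}  # "none" is shorthand for "noindex, nofollow"


class RobotsMetaParser(HTMLParser):
    """Collects directives from <meta name="robots"> and <meta name="googlebot"> tags."""

    def __init__(self) -> None:
        super().__init__()
        self.directives: list[str] = []

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        values = {name: (value or "") for name, value in attrs}
        if values.get("name", "").lower() in ("robots", "googlebot"):
            self.directives += [d.strip().lower() for d in values.get("content", "").split(",")]


def has_noindex(html: str, headers: dict[str, str]) -> bool:
    """True if the page opts out of indexing via a robots meta tag or X-Robots-Tag header."""
    parser = RobotsMetaParser()
    parser.feed(html)
    directives = list(parser.directives)
    for name, value in headers.items():
        if name.lower() == "x-robots-tag":  # header names are case-insensitive
            directives += [d.strip().lower() for d in value.split(",")]
    return any(d in NOINDEX for d in directives)


print(has_noindex('<head><meta name="robots" content="noindex, follow"></head>', {}))
print(has_noindex("<p>Hello</p>", {"X-Robots-Tag": "noindex"}))
```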

4. Is Google indexing free?

Yes, Google indexing is generally free. Google’s search engine crawls and indexes websites without charging website owners for the process. However, the cost of achieving high visibility in Google’s search results through practices like search engine optimization (SEO) and paid advertising may require investments in time and resources. The indexing process itself, though, is a free service provided by Google.

5. How to use Googlebot?

Googlebot is Google’s web crawling robot, and you don’t directly control its actions. However, you can influence how Googlebot interacts with your website in the following ways:

- Ensure your website is accessible and user-friendly.

- Use a robots.txt file to specify which parts of your site Googlebot can crawl.

- Implement proper HTML markup and structured data to help Google understand your content.

- Optimize your website for speed and mobile-friendliness to provide a positive user experience.
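Structured data is most often added as a JSON-LD `<script>` block using the schema.org vocabulary. The sketch below builds one for an article using hypothetical values. `Article`, `headline`, `author`, and `datePublished` are schema.org names, but check Google’s structured data documentation for the properties each result type needs. As with any markup, the data must describe what is actually visible on the page.

```python
import json


def article_json_ld(headline: str, author: str, date_published: str) -> str:
    """Return a JSON-LD <script> tag describing an article with schema.org types."""
    data = {
        "@context": "https://schema.org",
        "@type": "Article",
        "headline": headline,
        "author": {"@type": "Person", "name": author},
        "datePublished": date_published,  # ISO 8601 date, e.g. "2023-10-01"
    }
    # Escape "</" so text such as "</script>" cannot close the tag early.
    payload = json.dumps(data, indent=2).replace("</", "<\\/")
    return '<script type="application/ld+json">\n' + payload + "\n</script>"


print(article_json_ld("What Does De-Index Mean?", "Jane Doe", "2023-10-01"))
```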
